Letters of recommendation (LORs) are essential in academic medicine and influence many important advancement decisions, yet they take significant time and labor to prepare. Generative artificial intelligence (AI) tools, such as ChatGPT, are gaining popularity for a variety of academic writing tasks and offer an innovative way to relieve the burden of letter writing. It has yet to be determined whether ChatGPT could aid in crafting LORs, particularly in high-stakes contexts like faculty promotion. To assess the feasibility of this approach and whether AI- and human-authored letters differ significantly, we conducted a study to determine whether academic physicians can distinguish between the two.

A quasi-experimental study was conducted using a single-blind design. Academic physicians with experience in reviewing LORs were presented with LORs for promotion to associate professor, written by either humans or AI. Participants reviewed the LORs and judged whether each was written by a human or by AI. Statistical analysis was performed to determine accuracy in distinguishing between human- and AI-authored LORs. Additionally, the perceived quality and persuasiveness of the LORs were compared by both suspected and actual authorship.
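As a rough illustration of the accuracy analysis described above, the following sketch computes the fraction of correct authorship judgments and an exact two-sided binomial test against chance (50%). The response data, the function names, and the choice of a binomial test are assumptions made for illustration only; the abstract does not specify which statistical procedure was used.

```python
from math import comb


def accuracy(guesses, truths):
    """Fraction of letters whose authorship was identified correctly."""
    correct = sum(g == t for g, t in zip(guesses, truths))
    return correct / len(truths)


def binomial_two_sided_p(k, n, p=0.5):
    """Exact two-sided binomial p-value for k successes in n trials.

    Sums the probabilities of all outcomes that are no more likely
    than the observed one under the null success probability p.
    """
    pmf = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    threshold = pmf[k] * (1 + 1e-9)  # small tolerance for float ties
    return min(1.0, sum(q for q in pmf if q <= threshold))


# Hypothetical responses for one reviewer (NOT data from the study).
truths = ["human", "ai", "ai", "human", "ai", "human", "human", "ai"]
guesses = ["human", "human", "ai", "human", "human", "ai", "human", "ai"]

n_letters = len(truths)
n_correct = sum(g == t for g, t in zip(guesses, truths))

print(f"accuracy = {accuracy(guesses, truths):.3f}")
print(f"p vs. chance = {binomial_two_sided_p(n_correct, n_letters):.3f}")
```

With only a handful of letters per reviewer, a test like this has little power, so an accuracy modestly above 50% would not necessarily be distinguishable from guessing.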

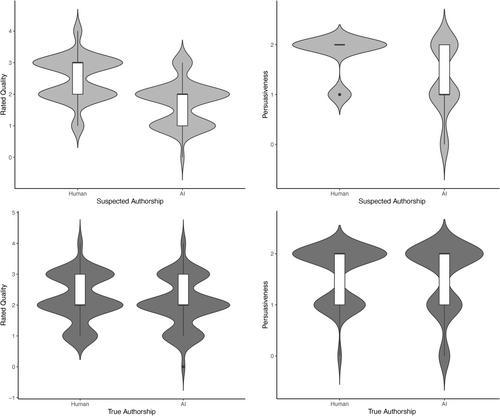

A total of 32 participants completed the letter review. The mean accuracy in distinguishing between human- and AI-authored LORs was 59.4%. Reviewers' certainty and time spent deliberating did not significantly affect accuracy. LORs suspected to be human-authored were rated more favorably in terms of quality and persuasiveness. A difference in gender-biased language was observed in our letters: human-authored letters contained significantly more female-associated words, whereas the majority of AI-authored letters tended to use more male-associated words.

Participants were unable to reliably differentiate between human- and AI-authored LORs for promotion. AI may be able to generate LORs and relieve the burden of letter writing for academicians. New strategies, policies, and guidelines are needed to balance the benefits of AI with the need to preserve integrity and fairness in academic promotion decisions.