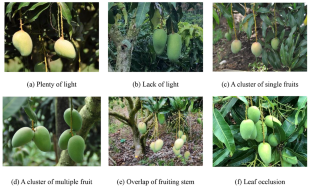

Because mango peel is similar in color to the leaves and many fruits grow on a single stem, it is difficult for robots to locate the picking point when harvesting fresh mangoes in the natural environment. A multi-task learning method named YOLOMS was proposed for mango recognition and rapid location of picking points on the main stem. Firstly, the backbone network of YOLOv5s was rebuilt with the RepVGG structure, and the original YOLOv5s loss function was replaced with the Focal-EIoU loss. The improved model could accurately identify mangoes and fruit stems in complex environments without reducing inference speed. Secondly, a mango stem segmentation subtask was added to the improved YOLOv5s model to construct the YOLOMS multi-task model, which obtains both the location and the semantic information of the fruit stem. Finally, strategies for main fruit stem recognition and picking point location were put forward to locate the picking point for a whole cluster of mangoes. Images of mangoes on trees in the natural environment were collected to test the performance of the YOLOMS model. The test results showed that the mAP and recall of YOLOMS for mango fruit and stem detection were 82.42% and 85.64%, respectively, and the MIoU of stem semantic segmentation reached 82.26%. The recognition accuracy for mangoes was 92.19%, the success rate of stem picking point location was 89.84%, and the average location time was 58.4 ms. Compared with the target detection models YOLOv4, YOLOv5s and YOLOv7-tiny and the segmentation models U-Net, PSPNet and DeepLab_v3+, the YOLOMS model performed significantly better and could locate the picking point quickly and accurately. This research provides technical support for mango picking robots to recognize the fruit and locate the picking point.
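
To illustrate the Focal-EIoU loss mentioned above, the following is a minimal sketch of its commonly published form for a single pair of boxes. It is not the YOLOMS implementation: the function name `focal_eiou_loss`, the `(x1, y1, x2, y2)` box format, and the default `gamma=0.5` are assumptions made for illustration only.

```python
def focal_eiou_loss(pred, target, gamma=0.5, eps=1e-9):
    """Focal-EIoU loss for one predicted box and one ground-truth box.

    Boxes are (x1, y1, x2, y2) with x2 > x1 and y2 > y1 (assumed format).
    gamma is the focal exponent; 0.5 is an assumed default, not a value
    taken from the YOLOMS paper.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target
    pw, ph = px2 - px1, py2 - py1
    tw, th = tx2 - tx1, ty2 - ty1

    # Intersection over union of the two boxes.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    union = pw * ph + tw * th - inter
    iou = inter / (union + eps)

    # Width and height of the smallest box enclosing both boxes.
    cw = max(px2, tx2) - min(px1, tx1)
    ch = max(py2, ty2) - min(py1, ty1)

    # Squared distance between box centers, normalized by the
    # squared diagonal of the enclosing box.
    dx = (px1 + px2) / 2 - (tx1 + tx2) / 2
    dy = (py1 + py2) / 2 - (ty1 + ty2) / 2
    center_term = (dx * dx + dy * dy) / (cw * cw + ch * ch + eps)

    # Width and height differences, each normalized by the
    # corresponding side of the enclosing box.
    width_term = (pw - tw) ** 2 / (cw * cw + eps)
    height_term = (ph - th) ** 2 / (ch * ch + eps)

    eiou = 1.0 - iou + center_term + width_term + height_term

    # Focal weighting: boxes with higher IoU contribute more to the loss.
    return (iou ** gamma) * eiou


if __name__ == "__main__":
    # Two 2x2 boxes that overlap in a 1x1 region.
    print(focal_eiou_loss((0, 0, 2, 2), (1, 1, 3, 3)))
```

Setting `gamma=0` reduces the function to the plain EIoU loss. In this form the focal weight is `iou ** gamma`, so a pair of boxes with no overlap receives zero weight.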