A central consideration in health professions education (HPE) is ensuring that the decisions we make on the basis of the assessment instruments we use with health professionals are sound and justifiable. To achieve this goal, HPE assessment researchers have drawn on Kane's argument-based framework to ascertain the validity of their assessment tools. However, the original four-inference model proposed by Kane, which is frequently used in HPE validation research, under-specifies what each inference entails and which claims and sources of backing belong to each inference. This under-specification has led to inconsistent practices in HPE validation research, posing challenges for (i) researchers who want to evaluate the validity of different HPE assessment tools and/or (ii) researchers who are new to test validation and need to establish a coherent understanding of argument-based validation.

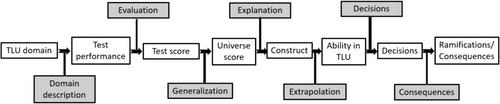

To address these concerns, this article introduces the expanded seven-inference argument-based validation framework that is established practice in the field of language testing and assessment (LTA). We explicate (i) why LTA researchers found it necessary to further specify the original four Kanean inferences; (ii) how LTA validation research defines each of its seven inferences; and (iii) what claims, assumptions and sources of backing are associated with each inference. Sampling six representative validation studies in HPE, we demonstrate why an expanded model and a shared disciplinary validation framework can facilitate the examination of validity evidence in diverse HPE validation contexts.

We invite HPE validation researchers to experiment with the seven-inference argument-based framework from LTA and to evaluate its usefulness to HPE. We also call for greater interdisciplinary dialogue between HPE and LTA, since both disciplines share many fundamental concerns about language use, communication skills, assessment practices and the validity of assessment instruments.